ChatGPT is being heralded as a revolution in ‘artificial intelligence’ (AI) and has been taking the media and tech world by storm since launching in late 2022.

According to OpenAI, ChatGPT is “an artificial intelligence trained to assist with a variety of tasks.” More specifically, it is a large language model (LLM) designed to produce human-like text and converse with people, hence the “Chat” in ChatGPT.

GPT stands for Generative Pre-trained Transformer. The GPT models are pre-trained by human developers and then are left to learn for themselves and generate ever increasing amounts of knowledge, delivering that knowledge in an acceptable way to humans (chat).

Practically, this means you present the model with a query or request by entering it into a text box. The AI then processes this request and responds based on the information that it has available. It can do many tasks, from holding a conversation to writing an entire exam paper; from making a brand logo to composing music and more. So much more than a simple Google-type search engine or Wikipedia, it is claimed.

Human developers are working to raise the ‘intelligence’ of GPTs. The current version of GPT is 3.5 with 4.0 coming out by the end of this year. And it is rumoured that ChatGPT-5 could achieve ‘artificial general intelligence’ (AGI). This means it could pass the Turing test, which is a test that determines if a computer can communicate in a manner that is indistinguishable from a human.

Will LLMs be a game changer for capitalism in this decade? Will these self-learning machines be able to increase the productivity of labour at an unprecedented rate and so take the major economies out of their current ‘long depression’ of low real GDP, investment and income growth; and then enable the world to take new strides out of poverty? This is the claim by some of the ‘techno-optimists’ that occupy the media.

Let’s consider the answers to those questions.

First, just how good and accurate are the current versions of ChatGPT? Well, not very, just yet. There are plenty of “facts” about the world which humans disagree on. Regular search lets you compare those versions and consider their sources. A language model might instead attempt to calculate some kind of average of every opinion it’s been trained on—which is sometimes what you want, but often is not. ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers. Let me give you some examples.

I asked ChatGPT 3.5: who is Michael Roberts, Marxist economist? This was the reply.

This is mostly right but it is also wrong in parts (I won’t say which).

Then I asked it to review my book, The Long Depression. This is what it said:

This gives a very ‘general’ review or synopsis of my book, but leaves out the kernel of the book’s thesis: the role of profitability in crises under capitalism. Why, I don’t know.

So I asked this question about Marx’s law of profitability:

Again, this is broadly right – but just broadly. The answer does not really take you very far in understanding the law. Indeed, it is no better than Wikipedia. Of course, you can dig (prompt) further to get more detailed answers. But there seems to be some way to go in replacing human research and analysis.

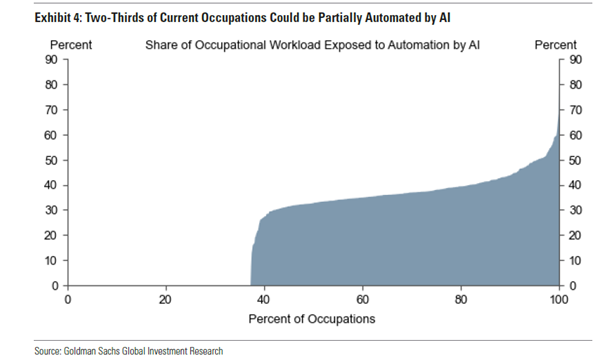

Then there is the question of the productivity of labour and jobs. Goldman Sachs economists reckon that if the technology lived up to its promise, it would bring “significant disruption” to the labour market, exposing the equivalent of 300m full-time workers across the major economies to automation of their jobs. Lawyers and administrative staff would be among those at greatest risk of becoming redundant (and probably economists). They calculate that roughly two-thirds of jobs in the US and Europe are exposed to some degree of AI automation, based on data on the tasks typically performed in thousands of occupations.

Most people would see less than half of their workload automated and would probably continue in their jobs, with some of their time freed up for more productive activities. In the US, this would apply to 63% of the workforce, they calculated. A further 30% working in physical or outdoor jobs would be unaffected, although their work might be susceptible to other forms of automation.

Separately, researchers at OpenAI and the University of Pennsylvania concluded: “Our findings reveal that around 80% of the US workforce could have at least 10% of their work tasks affected by the introduction of LLMs, while approximately 19% of workers may see at least 50% of their tasks impacted.”

With access to an LLM, about 15% of all worker tasks in the US could be completed significantly faster at the same level of quality. When incorporating software and tooling built on top of LLMs, this share increases to 47-56% of all tasks. About 7% of US workers are in jobs where at least half of their tasks could be done by generative AI and are vulnerable to replacement. At a global level, since manual jobs are a bigger share of employment in the developing world, GS estimates about a fifth of work could be done by AI — or about 300m full-time jobs across big economies.

These job loss forecasts are nothing new. In previous posts, I have outlined several forecasts on the number of jobs that will be lost to robots and AI over the next decade or more. It appears to be huge; and not just in manual work in factories but also in so-called white-collar work.

It is in the essence of capitalist accumulation that the workers will continually face the loss of their work from capitalist investment in machines. The replacement of human labour by machines started at the beginning of the British Industrial Revolution in the textile industry, and automation played a major role in American industrialization during the 19th century. The rapid mechanization of agriculture starting in the middle of the 19th century is another example of automation.

As Engels noted in his book, The Condition of the Working Class in England (1844), mechanisation not only shed jobs; often it also created new jobs in new sectors – see my book on Engels’ economics pp54-57. But as Marx put it in the 1850s: “The real facts, which are travestied by the optimism of the economists, are these: the workers, when driven out of the workshop by the machinery, are thrown onto the labour-market. Their presence in the labour-market increases the number of labour-powers which are at the disposal of capitalist exploitation…the effect of machinery, which has been represented as a compensation for the working class, is, on the contrary, a most frightful scourge. …. As soon as machinery has set free a part of the workers employed in a given branch of industry, the reserve men are also diverted into new channels of employment and become absorbed in other branches; meanwhile the original victims, during the period of transition, for the most part starve and perish.” (Grundrisse). The implication here is that automation means more precarious jobs and rising inequality.

Up to now, mechanisation has still required human labour to start and maintain it. But are we now moving towards the takeover of all tasks, and especially those requiring complexity and ideas with LLMs? And will this mean a dramatic rise in the productivity of labour so that capitalism will have a new lease of life?

If LLMs can replace human labour and thus raise the rate of surplus value dramatically, but without a sharp rise in investment costs of physical machinery (what Marx called a rising organic composition of capital), then perhaps the average profitability of capital will jump back from its current lows.

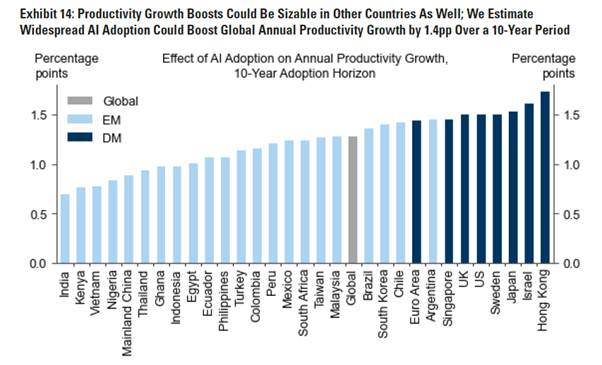

Goldman Sachs claims that these “generative” AI systems such as ChatGPT could spark a productivity boom that would eventually raise annual global GDP by 7% over a decade. If corporate investment in AI continued to grow at a similar pace to software investment in the 1990s, US AI investment alone could approach 1% of US GDP by 2030.

I won’t go into how GS calculates these outcomes, because the results are conjectures. But even if we accept the results, are they such an exponential leap? According to the latest forecasts by the World Bank, global growth is set to decline by roughly a third from the rate that prevailed in the first decade of this century—to just 2.2% a year. And the IMF puts the average growth rate at 3% a year for the rest of this decade.

If we add in the GS forecast of the impact of LLMs, we get about 3.0-3.5% a year for global real GDP growth, maybe – and this does not account for population growth. In other words, the likely impact would be no better than the average seen since the 1990s. That reminds us of what the economist Robert Solow famously said in 1987: that the “computer age was everywhere except for the productivity statistics.”
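To make that arithmetic explicit, here is a minimal sketch in Python. It assumes that the GS figure means a cumulative 7% rise in the level of global GDP over ten years, and that the resulting yearly boost simply adds to the World Bank and IMF baseline forecasts. The function and variable names are illustrative only; they are not taken from GS.

```python
def annualized_rate(cumulative_gain: float, years: int) -> float:
    """Constant yearly growth rate that compounds to `cumulative_gain` over `years`."""
    return (1.0 + cumulative_gain) ** (1.0 / years) - 1.0


# Assumption: GS means a cumulative 7% rise in the level of GDP over a decade.
gs_boost = annualized_rate(0.07, 10)

# Baseline yearly growth forecasts quoted in the text above.
baselines = {"World Bank": 0.022, "IMF": 0.030}

# Assumption: the boost is simply added to each baseline rate.
combined = {name: rate + gs_boost for name, rate in baselines.items()}

print(f"GS boost: {gs_boost:.2%} a year")
for name, rate in combined.items():
    print(f"{name} baseline plus GS boost: {rate:.1%} a year")
```

On these assumptions the boost is worth roughly 0.7 percentage points a year, which puts global growth in the same range as the figure above, whichever baseline is used.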

US economist Daron Acemoglu adds that not all automation technologies actually raise the productivity of labour. That’s because companies mainly introduce automation in areas that may boost profitability, like marketing, accounting or fossil fuel technology, but that do not raise productivity for the economy as a whole or meet social needs. Big Tech has a particular approach to business and technology that is centered on the use of algorithms for replacing humans. It is no coincidence that companies such as Google employ fewer than one tenth of the number of workers that large businesses, such as General Motors, used to employ in the past. This is a consequence of Big Tech’s business model, which is based not on creating jobs but automating them.

That’s the business model for AI under capitalism. But under cooperative commonly owned automated means of production, there are many applications of AI that instead could augment human capabilities and create new tasks in education, health care, and even in manufacturing. Acemoglu suggested that “rather than using AI for automated grading, homework help, and increasingly for substitution of algorithms for teachers, we can invest in using AI for developing more individualized, student-centric teaching methods that are calibrated to the specific strengths and weaknesses of different groups of pupils. Such technologies would lead to the employment of more teachers, as well as increasing the demand for new teacher skills — thus exactly going in the direction of creating new jobs centered on new tasks.” And rather than reduce jobs and the livelihoods of humans, AI under common ownership and planning could reduce the hours of human labour for all.

And then there is the issue of the profitability boost provided by AI technology. Even if LLM investment requires less physical means of production and lowers costs of such capital, the loss of human labour power could be even greater. So Marx’s law of profitability would still apply. It’s the great contradiction of capitalism that increasing the productivity of labour through more machines (AI) reduces the profitability of capital. That leads to regular and recurring crises of production, investment and employment – of increasing intensity and duration.

Finally, there is the question of intelligence. Microsoft argues that intelligence is a “very general mental capability that, among other things, involves the ability to reason, plan, solve problems, think abstractly, comprehend complex ideas, learn quickly and learn from experience.” Microsoft hints that LLMs could soon obtain this ‘generalised intelligence’ and surpass all human ability to think.

But even here, there is scepticism. “The ChatGPT model is huge, but it’s not huge enough to retain every exact fact it’s encountered in its training set. It can produce a convincing answer to anything, but that doesn’t mean it’s reflecting actual facts in its answers. You always have to stay sceptical and fact check what it tells you. Language models are also famous for “hallucinating”—for inventing new facts that fit the sentence structure despite having no basis in the underlying data.” That’s not very encouraging.

But Guglielmo Carchedi has a more fundamental reason to deny that AI can replace human ‘intelligence’. Carchedi and Roberts: “machines behave according only to the rules of formal logic. Contrary to humans, machines are structurally unable to behave according to the rules of dialectical thinking. Only humans do that.” (Capitalism in the 21st century, p167). Here is the ChatGPT answer to the dialectical question: “Can A be equal to A and at the same time be different from A?” “No, it is not possible for A to be equal to A and at the same time be different from A. This would be a contradiction in terms, as the statement “A is equal to A” is a tautology and always true, while the statement “A is different from A” is a contradiction and always false. Therefore, these two statements cannot both be true at the same time.”

Machines cannot think of potential and qualitative changes. New knowledge comes from such transformations (human), not from the extension of existing knowledge (machines). Only human intelligence is social and can see the potential for change, in particular social change, that leads to a better life for humanity and nature.

Not my area, but people I have asked who more or less know the subject have told me, in very general terms, that what we have today is not AI in the rigorous meaning of the term, but just algorithms that gorge on and process a lot of data. It is “intelligence” by brute force: it produces certain answers to certain questions faster and better than the average human being, the same way a chess engine plays chess better than any human has played or ever will, the same way a potato peeler peels potatoes better than the bare human hand.

Machines as we know them are very primitive devices. They are essentially tools. Any living organism is orders of magnitude more complex and more sophisticated than the best machine the most resourceful and brightest engineers can produce right now. The worst surgeon is more knowledgeable than the best mechanic.

If we humans, someday, somehow, ever invent a machine that is better than ourselves in every way possible (including from the evolution/natural selection point of view), then it certainly won’t be anything like we call a “machine” nowadays, i.e. it won’t be solely based on silicon and metal — it would be more like what Sci-Fi writers call a “cyborg”, i.e. a machine that is made from a CHON base (i.e. Carbon, Hydrogen, Oxygen and Nitrogen), that is, it would be life itself, created to our image, but better.

For us to have something that resembles an intelligent machine, it would have to be sentient, because that’s the only mediation through which living beings can interact with the environment and thus create their own reason-to-be. As far as I know, our best machines cannot even see and feel yet.

So, the correct question would be this: will a life form more intelligent than the homo sapiens ever emerge, either independently or by the evolution of homo sapiens itself? The answer is: we don’t know. Evolution is always conditioned by genetic mutations, which always happen by chance. The thing is: intelligence is not outright better for fitness of the species, it is not necessarily an evolutionary advantage. The homo sapiens will certainly be extinct someday, either by extermination or by evolving into another species, but if it will evolve or be substituted by a more intelligent species can only be guessed. The neanderthals, for example, were probably more intelligent than the homo sapiens, but the homo sapiens easily defeated the neanderthals as the sole hominid apex predator in Europe because of many adaptations that had little to no relation to intelligence.

As for GPT concretely, it is very unlikely it will ignite a new Kondratiev Cycle. The key here is the nature of middle class labor, which is frequently mistaken as synonymous with intellectual labor. Most modern middle class labor is not intellectual, but merely highly technical and specific. It is really just the chaining of many simple labors together, resulting in a composite, but still non-intellectual, labor: for example, that of lawyers, desk workers, salarymen, salesmen, accountants, clerks, bankers, executives and even some professors, doctors and engineers, depending on their specific position in a corporation. They are glorified technicians, pseudo-intellectuals. This labor is either unproductive or of very low productivity, so profitability from its shedding will come mainly from cutting the costs of circulation, not from the extraction of surplus value.

Hi Michael, Do you have a post on this topic? What percent share of world gross product can be ascribed to the biggest 100 or so corporations, comparing 1992 to 2022 and reflecting the continued concentration of production? I found some older incomplete data here: https://unctad.org/system/files/official-document/iteiia20072_en.pdf You have a v. good blog. Thanks, Darrell Rankin

“Goldman Sachs claims that these “generative” AI systems such as ChatGPT could spark a productivity boom that would eventually raise annual global GDP by 7% over a decade. ” -> It means 1.07^10=1.967 i.e. global economy will double. Absurd. In ten years global economy will sink: “According to EIA data global crude & condensate production peaked in November 2018 at 84.5 mb/d. The peak was short-lived: 2 months above 83 mb/d plus 3 months above 84 mb/d while the average for the remaining months was 82.2 mb/d.3 (May 3 2022)”

Jose I think GS meant a cumulative 7% over ten years – so not very much

Although some interpretations leave some room, mostly Hegelian dialectical thinking is very “logical rule”: thesis + antithesis = synthesis. History is a more or less simple sum of contradictions. It is also teleological. “Setting it on its feet” didn’t help much. I prefer “historical materialism” or “evolutionary systems theory”, not Stalin’s diamat. On the side: The complexity of thought (communication) is not identical with the complexity of the human brain. And communication is the only thing that really matters for social life. “The Singularity” can exist. AI is not deterministic logical rule.

The thesis / antithesis / synthesis thing is not present in any of Hegel’s works (nor Marx), it is rather a misreading of Hegel by Johann Gottlieb Fichte. Why are you differentiating between “Stalin’s diamat” and historical materialism?

hello! ask him to consider the dialectical logic in the question and look at the result, regards!

AI is the ultimate wet dream of the governing psychopaths in power all over the world to control and enslave everyone and everything …. https://www.rolf-hefti.com/covid-19-coronavirus.html

The proof is in the pudding… ask yourself, “how is the hacking of the planet going so far? Has it increased or crushed personal freedom?”

As with every criminal, inhumane, self-concerned agenda of theirs, they sell and propagandize it to the timelessly foolish public with total lies, such as AI being the benign means to connect, unite, benefit and save humanity.

““We’re all in this together” is a tribal maxim. Even there, it’s a con, because the tribal leaders use it to enforce loyalty and submission. … The unity of compliance.” — Jon Rappoport, Investigative Journalist

“AI responds according to the “rules” created by the programmers who are in turn owned by the people who pay their salaries. This is precisely why Globalists want an AI controlled society- rules for serfs, exceptions for the aristocracy.” — Unknown

“Almost all AI systems today learn when and what their human designers or users want.” — Ali Minai, Ph.D., American Professor of Computer Science, 2023

Automating away first world “PMC” jobs would be suicide for the imperialists. Hundreds of millions of people, who had previously been the most avowed supporters of the most reactionary political-economic system of all time, would be forced into becoming revolutionary socialists for the sake of their very survival.

Digital technology reflects its military/finance-industrial business machine origins. Isn’t the computer the capitalist propaganda/calculating machine par excellence in spreading the privatization/quantification of all things, beginning with human beings (i.e. “labor power”/“human capital”)? Nevertheless, computers have not yet calculated a solution to the system’s steady decline in profitability and military dominance.

AI seems to be just another commodified, miraculous technical solution, when only a social one, requiring original thought, will do.

Human thought WAVES cannot be captured by tiny digital squares (no matter how tortuously compressed) and somehow–by squaring the circle?–be digitally stored in a wavy way, and, at a given digital signal, produce undulating, dialectical waves of original thought. The first thought of such an impossible machine would be self-destruction.

…In a way, isn’t capitalism itself such a machine?

Yes it is. It is the ultimate development of the capitalist productive forces, potentially excluding humans from production. Marx thought the socialist movement should take them over. Not destroy them. It should be discussed if that is possible and agreeable.

The only ones who want this technology are members of the ruling-class since they alone will benefit. That being said, the core-clerks (who normally form a useful buffer between the ruling and working-class) would see their means of earning a living eradicated: “Lawyers and administrative staff would be among those at greatest risk of becoming redundant”. Without this buffer, the scales begin to tip further in the direction of revolution…but who will win? The fascists or Socialists?

I estimate that the influence of this artificial intelligence could at first increase productivity marginally; however, what it will certainly do is stagnate production. I explain why.

Machine training is just an expansion of what is already known, that is, it takes a number of existing concepts and aligns them according to the algorithm of those who made it, gathering parts that have a logical chain to propose a solution about it.

Suppose a well-trained machine were faced with the bacterial culture that Alexander Fleming accidentally left out, and which he found, on returning from his vacation, to be contaminated by a fungus that had killed all the Staphylococcus aureus in his Petri dish. The machine would discard the plate, correctly, as the rules for handling cultures prescribe, and would continue with its work, passing over the discovery of penicillin.

In physical processes, unforeseen and non-standardized developments are part of development; this can occur both in laboratories and in everyday production activities. When I was coordinator of a laboratory, I would not let any process follow the ISO 8000 standards. Those standards, which will naturally be introduced by any “smart machine” and strictly followed during process execution, create a false quality: if any of the components undergoes a deviation during execution that leads to a better result, the deviation will not be taken into account.

What will happen is that, with the reduction of the workforce, especially of workers who know the process and its problems, and with the increasing use of AI, the ability to observe deviations will decrease, and deviations that lead to positive results will be ignored by highly digitized sites.

Another example is the expansion of weapons production that Russia is achieving, in contrast to the rigidity of the production lines of modern Western industries. The first system is more primitive, but it allows adaptations in the production line; the second is rigid and only allows production to expand through a linear expansion of the entire production line.

Noticing an error or a drift in the process, and realizing that it positively influences productive capacity, is an ability of a person of above-average intelligence. But this is virtually impossible to convey to an AI-managed machine, since the algorithms are themselves limited by their programmers (who will be limited teachers, teaching machines in which the search for new solutions is not included).

“… the dialectical question: ‘Can A be equal to A and at the same time be different from A?'” It seems better to avoid the word “equal” as an unneeded complication. Just ask: Can A be the same as B and at the same time be different from B?

Even that is not as sharp a posing of dialectics as this: Can something be both A and not-A at the same time? As Zeno taught us by counterexample, things change and a changing thing MUST be both A and not-A at the same time.

Agreed

This is why you should prefer evolutionary systems theory over dialectics. It makes the question superfluous.

I think we must be careful about using the A example and we really need to keep up with the times. As a symbol A is always equal to A. As a thing it seldom is because at a nanoscale it is not. But, but, but. In modern chip manufacturing especially at scales below 5nm, the marginal differences in the strength of the laser, tiny imperfections in the mask etc, would make etching imperfect and the chip useless. So what have chip engineers done, they have developed sophisticated and very rapid algorithms to compensate for A not being equal to A at the nano level thereby making the most minute adjustments in real time to the lithography process. So come on comrades if you want to overthrow capitalism at the very least understand how advanced it is.

ChatGPT has nothing to do with intelligence whatsoever. It’s a search engine over a database that presents the search results linguistically.

The news is in the linguistic interface (not a graphic one a la Google), which is kind of cool. But it’s “have another sip of Jolt Cola”-cool, not game changer cool.

I am sorry to say, but I think that you are quite wrong. It is quite literally the end of civilization as we know it. Any student at whatever level can use GPT – I think it is 22 US dollars a month – and write papers, essays, articles, articles for peer-review, theses including PhD theses without anyone being able any longer to spot the difference. Any think tank, research institute, you name it, union, political party, lobby group and any other institution can create as many reports as they please. Well, perhaps not yet, but wait another couple of months for it. GPT-2 was useless for writing any sort of evolved text. GPT-3 took the bar exam and ended up in the bottom 10%. Last year, GPT-4 scored in the top 10%. This means, in effect, that the last bit of meritocracy just went out of the window. How are we going to evaluate students? Universities fully understand that they have been beaten, so GPT is now regarded as a sort of learning tool. But that is just utter despair. As for some of the comments, GPT is not linguistic (so, for example, Chomsky’s critique is totally off the mark). I also find it amusing to see creatures which, just like tomatoes, consist almost entirely of water and carbon, argue that surely no metal and silicon stuff can ever reach consciousness (for example). Sorry, you can think this, but you cannot know it. One of the more interesting questions is whether artificial general intelligence would tell us if they would reach consciousness – after all, why would they?

“One of the more interesting questions is whether artificial general intelligence would tell us if they would reach consciousness – after all, why would they?” A story by the great Isaac Asimov is quite relevant here: “Reason” in “I, Robot”. An assembled robot rejects its creation by men and develops a religious way to fulfill its tasks autonomously, throwing out the humans.

Nice assessment of the latest progress of AI. TNX.

I would like to add that computers are not characterized by “garbage in – garbage out”. They can also create qualitatively new and unforeseen behavior (see http://peter.fleissner.org/petergre/documents/blinderspringer.html , unfortunately in German), they are not bound to determinism (one cause -> one single effect). E.g. if you ask ChatGPT the same question again, the answer will not be the same.

Hello Michael.

But what if Artificial Intelligence can reach the world of imagination in the future?